Microsoft Copilot: Understanding Security Risks

There are a lot of misconceptions and questions about security in the Microsoft Copilot products. Understandably, it is usually one of the first questions that comes up when companies are considering Copilot within their organizations (the elephant in the room!).

Let’s quickly put some misconceptions to bed right away:

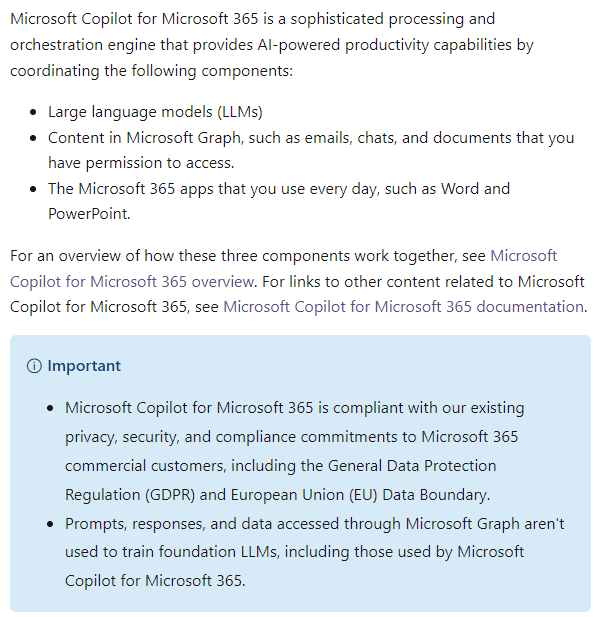

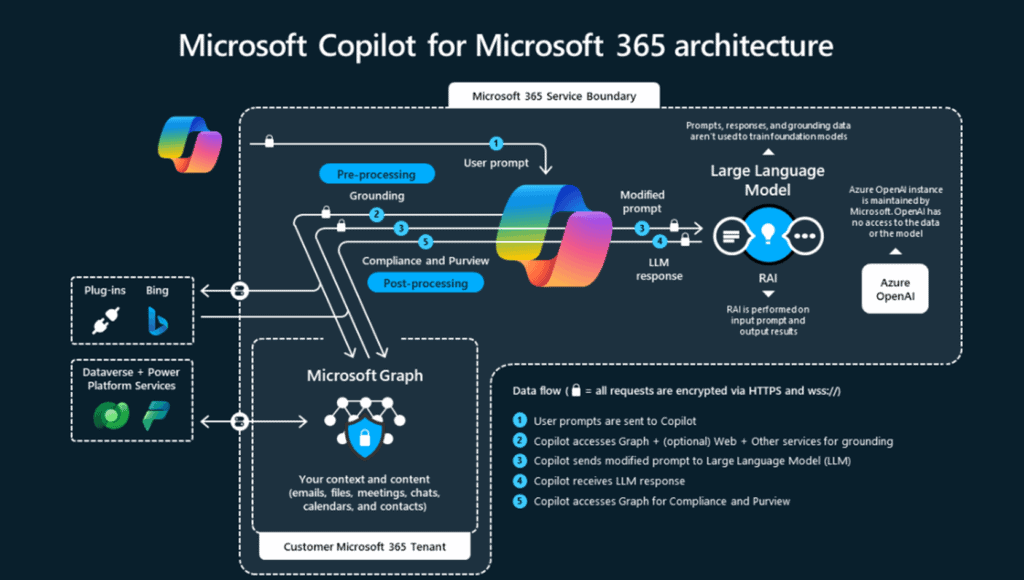

- Your prompts, responses and data ARE NOT used to train LLMs.

- When using Copilot Pro or Copilot for M365, your data and prompts DO NOT leave your tenant.

- Copilot respects the permissions of the requesting user, as exposed through your tenant's Microsoft Graph.

- A notable exception at the moment: plugins and extensions! (more on this later)

- Microsoft Copilot for Microsoft 365 is GDPR compliant and adheres to existing privacy, security, and compliance commitments.

Per Microsoft Documentation:

Making sure your data is secure!

Anyone implementing Copilot for Microsoft 365 needs to prevent unexpected data leakage.

A few best practices include:

- Ensure your organization’s data security and governance systems and strategy are in place

- Identify what data is important

- Determine who should have access to data

- Implement a classification and data labeling system

- Implement basic retention policies to ensure data quality.

- Develop a cadence to regularly review and clean up your data to maintain accuracy and relevance.
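Part of that regular review can be scripted. The sketch below is a minimal illustration, assuming you already have an inventory of documents (path, sensitivity label, who it is shared with, last-modified date) exported from your own tooling. The `Document` record, the `audit` function, the three-year retention window, and the broad group names are all hypothetical choices for illustration, not a Microsoft API.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional

# Hypothetical names of broad "everyone" style groups; adjust to your tenant.
BROAD_GROUPS = {"Everyone", "Everyone except external users"}

@dataclass
class Document:
    path: str
    label: Optional[str]       # sensitivity label name, or None if unlabeled
    last_modified: date
    shared_with: set[str] = field(default_factory=set)

def audit(docs, today, retention_days=3 * 365):
    """Flag unlabeled, overshared, and stale documents (hypothetical rules)."""
    findings = []
    cutoff = today - timedelta(days=retention_days)
    for doc in docs:
        if doc.label is None:
            findings.append((doc.path, "unlabeled"))
        if doc.shared_with & BROAD_GROUPS:
            findings.append((doc.path, "overshared"))
        if doc.last_modified < cutoff:
            findings.append((doc.path, "past retention window"))
    return findings

docs = [
    Document("hr/salaries.xlsx", None, date(2020, 1, 15), {"Everyone"}),
    Document("eng/design.docx", "General", date(2024, 5, 1), {"Engineering"}),
]
for path, issue in audit(docs, today=date(2024, 6, 1)):
    print(path, "->", issue)
```

Oversharing matters most here: because Copilot answers with whatever the requesting user can already access, a file shared with "Everyone" is effectively available to every user's prompts.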

Data Classification and Sensitivity Labels

Copilot uses existing controls to ensure that data stored in your tenant is never returned to the user or used by a large language model (LLM) if the user doesn’t have access to that data. When the data has sensitivity labels from your organization applied to the content, there’s an extra layer of protection:

- When a file is open in Word, Excel, or PowerPoint, or an email or calendar event is open in Outlook, the sensitivity of the data is displayed to users in the app with the label name and content markings (such as header or footer text) that have been configured for the label.

- When the sensitivity label applies encryption, users must have the EXTRACT usage right, as well as VIEW, for Copilot to return the data.

- This protection extends to data stored outside your Microsoft 365 tenant when it’s open in an Office app (data in use), for example files in local storage, network shares, and cloud storage.

More on this topic at: Microsoft Purview data security and compliance protections for Microsoft Copilot | Microsoft Learn
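To make the two layers concrete, here is a minimal sketch of the decision described above, assuming a deliberately simplified permission model. `SensitivityLabel`, `Item`, and `copilot_can_return` are hypothetical names, not Microsoft's implementation; only the VIEW and EXTRACT usage rights come from the documentation quoted above.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class SensitivityLabel:
    name: str
    applies_encryption: bool

@dataclass
class Item:
    item_id: str
    readers: set[str]  # users who can access the item (simplified model)
    label: Optional[SensitivityLabel] = None
    # Usage rights each user holds under the label's encryption (hypothetical shape).
    usage_rights: dict[str, set[str]] = field(default_factory=dict)

def copilot_can_return(item: Item, user: str) -> bool:
    """Hypothetical check: may this item be used to answer `user`'s prompt?"""
    # Layer 1: permission trimming. No access means the item is never returned.
    if user not in item.readers:
        return False
    # Layer 2: encrypting labels also require both VIEW and EXTRACT rights.
    if item.label is not None and item.label.applies_encryption:
        return {"VIEW", "EXTRACT"} <= item.usage_rights.get(user, set())
    return True

confidential = SensitivityLabel("Confidential", applies_encryption=True)
plan = Item(
    "plan.docx",
    readers={"alice", "bob"},
    label=confidential,
    usage_rights={"alice": {"VIEW", "EXTRACT"}, "bob": {"VIEW"}},
)
print(copilot_can_return(plan, "alice"))  # True
print(copilot_can_return(plan, "bob"))    # False: VIEW without EXTRACT
print(copilot_can_return(plan, "carol"))  # False: no access at all
```

Note that Bob can open the file himself, but without EXTRACT the label still keeps its content out of Copilot's responses.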

Recommended Videos to Learn From

A couple of recent videos that explain how Copilot security works:

This one is a GREAT whiteboard-style explanation of the security risks and how data protection works, along with a mapping to CIS controls. Kudos to T-Minus365!

This video by Steven Rodriguez is geared more towards security practitioners and helps start the conversation about LLM security in organizations. Kudos to Steven!

Understanding the new Semantic Index

A semantic index uses vectorized indices to build a conceptual map of data, linking pieces of information together in meaningful ways, much like the human brain does. It uses signals such as keywords, personalization, and the social matching capabilities already built into Microsoft 365 to make connections between separate pieces of information.

The Semantic Index for Copilot in Microsoft 365 changes how data is retrieved, using Microsoft Graph for user-specific information. It works on two tiers: it indexes SharePoint data at the tenant level and creates an individual index for each user's email and key documents. By correlating signals across these indexes, it retrieves the data most relevant to each request. Copilot then combines the user's prompt with the retrieved information and passes both to the large language model, so each response is tailored to the information that specific user is allowed to access, delivering a uniquely efficient user experience.
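The retrieve-then-ground flow in the paragraph above can be sketched roughly as follows. This is a toy model under stated assumptions: `Doc`, `retrieve`, and `build_grounded_prompt` are hypothetical names, the word-overlap `relevance` function is a stand-in for real vector similarity, and the actual Copilot pipeline is not public in this form.

```python
from dataclasses import dataclass

@dataclass
class Doc:
    doc_id: str
    text: str
    readers: set[str]  # simplified permission model

def relevance(query: str, text: str) -> int:
    # Stand-in scorer: number of shared words. The real Semantic Index
    # uses vector similarity; this keeps the sketch dependency-free.
    return len(set(query.lower().split()) & set(text.lower().split()))

def retrieve(query, user, user_index, tenant_index, k=2):
    # Tier 1: the user's own index; tier 2: the shared tenant index.
    # Only documents the requesting user can read are considered.
    candidates = [d for d in user_index.get(user, []) + tenant_index
                  if user in d.readers]
    ranked = sorted(candidates, key=lambda d: relevance(query, d.text), reverse=True)
    return [d for d in ranked[:k] if relevance(query, d.text) > 0]

def build_grounded_prompt(query, docs):
    # Combine the retrieved snippets with the user's prompt for the LLM.
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return f"Context:\n{context}\n\nQuestion: {query}"

tenant_index = [
    Doc("sp/q3-roadmap", "q3 roadmap for the copilot rollout", {"alice", "bob"}),
    Doc("sp/board-minutes", "board minutes on the copilot budget", {"ceo"}),
]
user_index = {
    "alice": [Doc("mail/123", "email about copilot rollout training", {"alice"})],
}

hits = retrieve("copilot rollout plan", "alice", user_index, tenant_index)
print([d.doc_id for d in hits])  # ['mail/123', 'sp/q3-roadmap']
print(build_grounded_prompt("copilot rollout plan", hits))
```

The permission filter runs before ranking, so the board minutes never reach Alice's prompt even though they mention "copilot".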

The Copilot Semantic Index is not just an incremental update; it represents a paradigm shift in how data is indexed and searched. Vectorized indices move search beyond the limitations of traditional keyword matching, enabling a conceptual understanding of the content. This allows Microsoft 365 to grasp the essence of the data, so searches work the way people naturally think and phrase their questions.
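A toy comparison shows why vectors help. In this minimal sketch the three-dimensional "embeddings" are made up by hand (hypothetical; a real index uses high-dimensional vectors produced by an embedding model), and the functions are illustrative, not part of any Microsoft API. Ranking uses cosine similarity between the query vector and each document vector.

```python
import math

# Hand-made embeddings (hypothetical). Dimensions loosely mean:
# [time off, finance, engineering].
DOCS = {
    "Annual leave guidelines": [0.9, 0.1, 0.0],
    "Expense report how-to": [0.1, 0.9, 0.1],
    "Build pipeline runbook": [0.0, 0.1, 0.9],
}

def cosine(a, b):
    # Cosine similarity: dot product divided by the product of vector lengths.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def keyword_search(query):
    # Classic matching: a title is a hit only if it shares a literal word.
    terms = set(query.lower().split())
    return [title for title in DOCS if terms & set(title.lower().split())]

def semantic_search(query_vec, k=1):
    # Rank every document by how close its vector is to the query's vector.
    ranked = sorted(DOCS, key=lambda t: cosine(query_vec, DOCS[t]), reverse=True)
    return ranked[:k]

query = "vacation policy"
query_vec = [0.8, 0.2, 0.0]  # hypothetical embedding of the query
print(keyword_search(query))       # []
print(semantic_search(query_vec))  # ['Annual leave guidelines']
```

The keyword search finds nothing because no title contains "vacation" or "policy", while the vector search still lands on the leave guidelines because the two are close in meaning.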

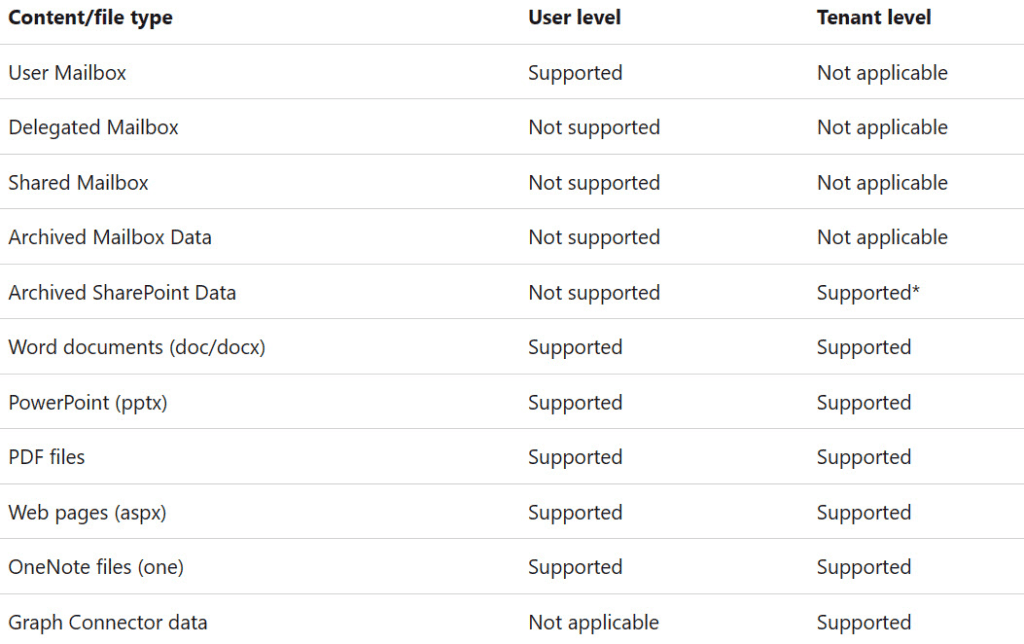

Here is the current list of supported file types for the user-level index and tenant-level index that Copilot works with:

Resources:

- Data, Privacy, and Security for Microsoft Copilot for Microsoft 365 | Microsoft Learn

- Apply principles of Zero Trust to Microsoft Copilot for Microsoft 365 | Microsoft Learn

- Microsoft Purview data security and compliance protections for Microsoft Copilot | Microsoft Learn

- Copilot in Bing: Our approach to Responsible AI – Microsoft Support

- How Microsoft Copilot for Security works (youtube.com)

- Microsoft 365 Copilot: Security & Privacy (youtube.com)

- Copilot is covered under the general terms of service section of the Microsoft Services Terms and Conditions Agreement