Diffusion LLMs: Are They the Next Wave?

Over the past couple of years, we have witnessed an incredible evolution in artificial intelligence. AI has gone from simple chatbots to sophisticated language models that can write, translate, and generate new content, including software! The progress has been nothing short of breathtaking. As soon as you learn something, it changes, or a new advance appears that leaves you wanting to know more! Many of us are still getting used to simple chatbots, but the progress has not stopped!

A fascinating new approach is emerging, promising to redefine how we interact with AI: diffusion large language models (diffusion LLMs).

Beyond Sequential Generation: A New Paradigm

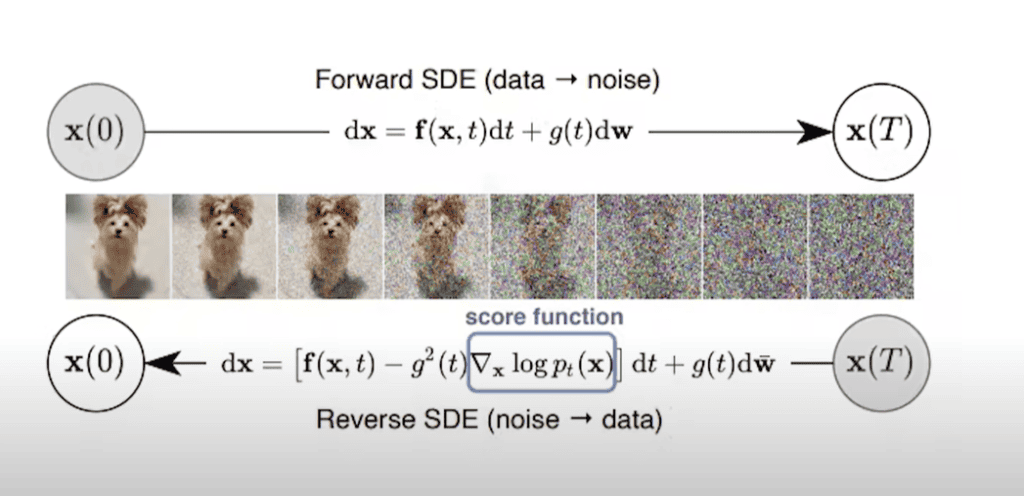

Traditional LLMs, as remarkable as they are, generate text sequentially, one word or token at a time, from left to right. Imagine building a sentence brick by brick. Diffusion LLMs take a radically different approach. Instead of committing to one token after another, they work on the entire response at once and update every position in parallel over a series of steps. This isn’t just a minor tweak; it’s a fundamental shift in how language models function.
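
For contrast, here is a toy sketch of the traditional left-to-right loop in Python. `toy_next_token` is a hypothetical stand-in for a trained model: it just reads the next word of a fixed sentence. Only the shape of the loop matters here. Each model call produces one new token, and that token is always appended at the right-hand end.

```python
# Toy illustration of autoregressive (left-to-right) decoding.
# ASSUMPTION: `toy_next_token` is a hypothetical stand-in for a trained model;
# it simply looks up the next word of a fixed target sentence.

TARGET = "diffusion models refine the whole answer at once".split()


def toy_next_token(prefix: list[str]) -> str:
    """Hypothetical 'model': returns the word that follows `prefix`."""
    return TARGET[len(prefix)]


def autoregressive_generate(length: int) -> list[list[str]]:
    """Build the output one token per model call; return the partial output after each call."""
    tokens: list[str] = []
    history: list[list[str]] = []
    while len(tokens) < length:
        # Each call commits exactly one token, always at the right-hand end.
        tokens.append(toy_next_token(tokens))
        history.append(list(tokens))
    return history


if __name__ == "__main__":
    for step, partial in enumerate(autoregressive_generate(len(TARGET)), start=1):
        print(step, " ".join(partial))
```

The eight-word sentence takes eight calls, and the output grows strictly from left to right.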

The Magic of Iterative Refinement

How do they achieve this? Through iterative refinement, much as a sculptor roughs out a shape and then works in the detail. A diffusion LLM begins with a rough, preliminary draft, a sort of “noisy” version of the final output. Then, over a series of steps, it progressively refines this draft into a coherent, polished response. Think of it like starting with a blurry image and gradually sharpening it until a clear picture emerges. Iteration is, after all, how humans work too!
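
To make the refinement loop concrete, here is a toy sketch in Python. It assumes one family of text diffusion models, masked diffusion, where the “noise” is a row of [MASK] placeholders rather than blurred pixels. The `toy_denoiser`, its confidence scores and the schedule of two tokens per step are all hypothetical. A real model would predict tokens and confidences from learned weights while looking at the whole draft.

```python
import math

MASK = "[MASK]"
TARGET = "diffusion models refine the whole answer at once".split()

# ASSUMPTION: hypothetical per-position "easiness" scores standing in for a
# real model's confidence. They are deliberately not in left-to-right order.
BASE_CONFIDENCE = [0.9, 0.3, 0.6, 0.2, 0.8, 0.4, 0.7, 0.5]
NEIGHBOUR_BONUS = 0.25  # revealed neighbours make a position easier to fill


def toy_denoiser(draft: list[str]) -> list[tuple[str, float]]:
    """Hypothetical denoiser: propose a (token, confidence) pair for every position."""
    proposals = []
    for i, tok in enumerate(draft):
        if tok != MASK:
            proposals.append((tok, 1.0))  # already committed
            continue
        confidence = BASE_CONFIDENCE[i]
        if i > 0 and draft[i - 1] != MASK:
            confidence += NEIGHBOUR_BONUS
        if i < len(draft) - 1 and draft[i + 1] != MASK:
            confidence += NEIGHBOUR_BONUS
        proposals.append((TARGET[i], confidence))
    return proposals


def diffusion_generate(length: int, steps: int) -> list[list[str]]:
    """Start from an all-[MASK] draft; at each step, commit the most confident
    masked positions in parallel. Returns the draft after every step."""
    draft = [MASK] * length
    history = []
    per_step = math.ceil(length / steps)
    for _ in range(steps):
        proposals = toy_denoiser(draft)  # one call scores every position
        masked = [i for i, t in enumerate(draft) if t == MASK]
        masked.sort(key=lambda i: proposals[i][1], reverse=True)
        for i in masked[:per_step]:  # several tokens committed per step
            draft[i] = proposals[i][0]
        history.append(list(draft))
    return history


if __name__ == "__main__":
    for step, draft in enumerate(diffusion_generate(len(TARGET), steps=4), start=1):
        print(step, " ".join(draft))
```

Running it prints four drafts:

1 diffusion [MASK] [MASK] [MASK] whole [MASK] [MASK] [MASK]
2 diffusion [MASK] [MASK] [MASK] whole answer at [MASK]
3 diffusion [MASK] refine [MASK] whole answer at once
4 diffusion models refine the whole answer at once

The words are filled in out of order, and each step commits two of them at once. This sketch never revisits a token once it is committed. That is one simple design choice, not the only one a diffusion sampler could make.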

Why This Matters: The Exciting Advantages

This innovative approach unlocks a range of compelling advantages:

- Blazing Speed: Imagine getting answers almost instantly. Because they can settle many tokens per step instead of one, diffusion LLMs have the potential to deliver responses significantly faster than their traditional counterparts, making interactions more fluid and efficient.

- Enhanced Reasoning and Structure: Because they work on the whole output at once, these models can take a holistic view of the response, which may lead to more coherent and well-structured text. They aren’t bound to a strict left-to-right order.

- Improved Accuracy: The iterative refinement process gives the model repeated chances to revise its draft, a form of built-in error correction that may help mitigate the “hallucinations” that sometimes plague LLMs.

- Greater Control: This method may allow more nuanced control over the generated text, helping us guide the model toward specific objectives and support safety and alignment.

- Efficiency for Edge Computing: The potential for smaller and more efficient models opens doors for deploying powerful AI on devices with limited resources, bringing advanced capabilities to our everyday lives.
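
The speed argument comes down to counting sequential model calls. The Python sketch below assumes a decoder that commits a fixed number of tokens per forward pass. The 512-token response and the 8-tokens-per-pass setting are hypothetical numbers for illustration. They are not measurements of any real model.

```python
import math


def sequential_passes(num_tokens: int, tokens_per_pass: int) -> int:
    """Back-to-back forward passes needed to emit `num_tokens` when each pass
    commits `tokens_per_pass` tokens."""
    return math.ceil(num_tokens / tokens_per_pass)


# Autoregressive decoding: one token per pass.
ar = sequential_passes(512, 1)
# Diffusion-style decoding. ASSUMPTION: 8 tokens per pass is a hypothetical
# setting chosen for illustration, not a measured figure for any model.
diff = sequential_passes(512, 8)

print(ar, diff)  # 512 64
```

Fewer sequential passes do not automatically mean lower latency. Each diffusion pass processes every position in the sequence, so the real-world speed-up depends on the model, the number of refinement steps, and the hardware.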

This is exciting!

The development of Diffusion LLMs marks a significant leap forward in the field of artificial intelligence. It’s a testament to the relentless innovation that drives this field, constantly pushing the boundaries of what’s possible. As researchers and startups continue to explore and refine these models, we can expect to see even more groundbreaking applications that will transform how we interact with technology and the world around us. The age of intelligent machines is upon us, and the possibilities are truly limitless!

This is interesting as a first large diffusion-based LLM.

Most of the LLMs you've been seeing are ~clones as far as the core modeling approach goes. They're all trained "autoregressively", i.e. predicting tokens from left to right. Diffusion is different – it doesn't go left to… https://t.co/I0gnRKkh9k

— Andrej Karpathy (@karpathy) February 27, 2025